Visual Perception Location Dataset of Gubeikou Great Wall

Li, Z. H.1 Li, R. J.1,2,3* Sun, B. L.1 Li, J. H.1

1. College of Geographical Sciences, Hebei Normal University, Shijiazhuang 050024, China;

2. GeoComputation and Planning Center of Hebei Normal University, Shijiazhuang 050024, China;

3. Hebei Technology Innovation Center for Remote Sensing Identification of Environmental Change, Shijiazhuang 050024, China

Abstract: The Great Wall is a crucial visual landscape resource with multiple dimensions of meaning, including history, culture, and morphological aesthetics. Visual perception calculation and analysis is an important approach to exploring the value of the Great Wall's landscape resources and to presenting and explaining its multidimensional significance. For this dataset, rules for extracting and coding the semantic feature points of the Great Wall landscape system were designed; landscape semantic feature points were generated from the ontology resources of the Gubeikou Great Wall and ALOS 12.5 m DEM data; and a viewshed raster was obtained by analyzing each feature point. Then, based on the landscape visual perception location information model, the landscape visual perception location dataset of the Gubeikou Great Wall was constructed in the NetCDF multidimensional data format. The dataset consists of three parts: (1) a subset of semantic feature points selected manually, (2) a subset of semantic feature points selected automatically by a program, and (3) verification points. Data subsets (1) and (2) include the vector data of the ontology features and semantic feature points of the Gubeikou Great Wall, and the visual perception location data of the Gubeikou Great Wall landscape. The dataset is stored in .shp and .nc formats and consists of 64 data files with a total size of 6.58 GB (compressed into 1 file of 63.8 MB).

Keywords: visual perception location; landscape semantic feature points; the Great Wall; NetCDF

DOI: https://doi.org/10.3974/geodp.2024.01.04

CSTR: https://cstr.escience.org.cn/CSTR:20146.14.2024.01.04

Dataset Availability Statement:

The dataset supporting this paper was published and is accessible through the Digital Journal of Global Change Data Repository at: https://doi.org/10.3974/geodb.2024.04.03.V1 or https://cstr.escience.org.cn/CSTR:20146.11.2024.04.03.V1.

1 Introduction

Cultural heritage is an important carrier of history and culture and a witness to the development of human history. In recent years, the digitalization[1] and revitalization[2] of cultural heritage have increasingly become a focus of attention both in China and internationally, while the ontological value of cultural heritage and the experiential value it offers remain at the core. In July 2019, the 9th meeting of the Commission for Deepening Overall Reform of the CPC Central Committee deliberated and adopted the Construction Plan for the Great Wall, Grand Canal, and Long March National Cultural Park, marking the official launch of national cultural park construction[3]. The Great Wall is a linear cultural heritage in China with a large volume, a long time span, and great influence, and it plays an important role in promoting economic development, cultural exchange, and national integration in the Great Wall region[4]. The spatial combination and configuration of the main landscape elements of the Great Wall, such as the wall, enemy stations, beacon towers, and Guan forts, carry great aesthetic and cultural significance, and their ingenious integration with the surrounding environment further makes the Great Wall a valuable visual landscape resource. Vision is a fundamental condition for landscape perception[5]. Achieving high-quality construction and development of the Great Wall National Cultural Park therefore requires a comprehensive exploration of the visual perception locations from which the significance of the Great Wall landscape can be experienced. However, traditional field investigation methods can hardly search comprehensively for visual landscape perception locations with rich semantic information, and existing research has not yet produced dataset products containing landscape visual perception location information from a resource perspective.

The Gubeikou Great Wall is located in the southeast of Gubeikou Town, Miyun District, Beijing, and in the southern part of Luanping County, Chengde City. It comprises four sections, Simatai, Jinshanling, Panlongshan, and Wohushan, and is characterized by broad vistas, densely spaced enemy towers, unique landscapes, sophisticated craftsmanship, and an intact original appearance, which give it high value as a visual landscape resource. In this dataset, the landscape ontology of the Gubeikou Great Wall and its visual perception location information were investigated and integrated. The landscape ontology resources of the Gubeikou Great Wall were expressed digitally as landscape semantic feature points, and their landscape semantic attributes were expressed by coding. Meanwhile, the visual perception location information of the Gubeikou Great Wall was integrated and stored in the NetCDF multidimensional raster data format, establishing the visual relationship between visual perception locations and landscape semantics and integrating information on target landscapes, perception locations, and visual states. The dataset provides data for heritage protection and the integrated development of culture and tourism, and it supports value mining of landscape resources based on visual perception.

2 Metadata of the Dataset

The metadata of the Landscape visual perception location dataset of Great Wall in Gubeikou[6] are summarized in Table 1, including the dataset's full name, short name, authors, geographical region, spatial resolution, data format, data size, data files, data publisher, and data sharing policy.

Table 1 Metadata summary of the Landscape visual perception location dataset of Great Wall in Gubeikou

| Items | Description |
|---|---|
| Dataset full name | Landscape visual perception location dataset of Great Wall in Gubeikou |
| Dataset short name | Gubeikou_LVPLM |
| Authors | Li, Z. H. JTU-3036-2023, College of Geographical Sciences, Hebei Normal University, lizhhg@163.com; Li, R. J. JZD-9102-2024, College of Geographical Sciences, Hebei Normal University, lrjgis@hebtu.edu.cn; Sun, B. L. JYP-6636-2024, College of Geographical Sciences, Hebei Normal University, stayreal9523@163.com; Li, J. H. JYP-6677-2024, College of Geographical Sciences, Hebei Normal University, ljh06524@163.com |
| Geographical region | Grid covering a 5 km range along the Gubeikou Great Wall |
| Spatial resolution | 12.5 m |
| Data format | .shp, .nc |
| Data size | 63.8 MB (compressed) |
| Data files | Gubeikou Great Wall ontology features and landscape semantic feature point vector data; landscape visual perception location multidimensional raster data |
| Foundations | Natural Science Foundation of Hebei Province (D2023205011); National Natural Science Foundation of China (41471127) |
| Computing environment | ArcGIS |
| Data publisher | Global Change Research Data Publishing & Repository, http://www.geodoi.ac.cn |
| Address | No. 11A, Datun Road, Chaoyang District, Beijing 100101, China |
| Data sharing policy | (1) Data are openly available and can be downloaded free of charge via the Internet; (2) End users are encouraged to use Data subject to citation; (3) Users, who are by definition also value-added service providers, are welcome to redistribute Data subject to written permission from the GCdataPR Editorial Office and the issuance of a Data redistribution license; and (4) If Data are used to compile new datasets, the "ten per cent principle" should be followed such that Data records utilized should not surpass 10% of the new dataset contents, while sources should be clearly noted in suitable places in the new dataset[7] |
| Communication and searchable system | DOI, CSTR, Crossref, DCI, CSCD, CNKI, SciEngine, WDS/ISC, GEOSS |

3 Methods

3.1 Data Sources

(1) The data on the Great Wall landscape resources were collected from the Ming Great Wall heritage data provided by the National Cultural Relics Census and the Great Wall Station volunteer geographic information platform. The research area covers 26.49 km of the Great Wall, including 154 enemy stations, 13 beacon towers, 1 shop house, 2 water passes, and 1 Guan fort.

(2) The DEM data were ALOS satellite data with a spatial resolution of 12.5 m, obtained from NASA's Earthdata website.

(3) Environmental background and geographical elements were taken from China's 1:1,000,000 basic geographic dataset.

(4) The verification data were collected by the project team through a field survey.

3.2 Dataset Organization Framework

The Landscape Visual Perception Location Model (LVPLM)[8] was employed to organize the dataset, with each landscape semantic feature point taken as the basic unit of visual perception location calculation. The results were stored in the NetCDF multidimensional data format. The organization model of the dataset is expressed in Equation (1).

$$\mathrm{LVPLM} = f(X, Y, \mathrm{Points}) \tag{1}$$

where X and Y denote the location dimensions of the dataset, whose values are the X and Y coordinates of the landscape visual perception locations, and Points denotes the feature point dimension, whose values are the codes of the semantic feature points. The three dimensions X, Y, and Points provide the spatial location and feature point coding information, and the data variables provide the visual perception information. On this basis, bidirectional queries between landscape semantic feature points and their visual perception states can be performed, establishing the correlation between the landscape of the Gubeikou Great Wall and its visual perception locations.
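To make Equation (1) concrete, the following Python sketch models the dataset as a stack of 0/1 viewshed rasters indexed by Points, Y, and X. It is an illustrative in-memory stand-in, not a reader for the published .nc files: the class `LVPLMCube`, its field names, and the integer 0/1 encoding are assumptions, and the actual dimension and variable names should be taken from the files themselves.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence

Grid = List[List[int]]  # one viewshed raster: rows along Y, columns along X


@dataclass
class LVPLMCube:
    """In-memory stand-in for LVPLM = f(X, Y, Points); values are 1 (visible) or 0."""
    x: List[float]        # X coordinate of each raster column
    y: List[float]        # Y coordinate of each raster row
    points: List[str]     # semantic feature point codes along the Points dimension
    visible: List[Grid]   # visible[k][row][col] belongs to points[k]

    @classmethod
    def from_viewsheds(cls, x: Sequence[float], y: Sequence[float],
                       viewsheds: Dict[str, Grid]) -> "LVPLMCube":
        """Stack per-point viewshed rasters along Points, checking the shared grid."""
        codes = sorted(viewsheds)
        for code in codes:
            grid = viewsheds[code]
            if len(grid) != len(y) or any(len(row) != len(x) for row in grid):
                raise ValueError(f"viewshed for {code} does not match the X/Y grid")
        return cls(list(x), list(y), codes, [viewsheds[c] for c in codes])

    def value(self, code: str, x: float, y: float) -> int:
        """Visibility of feature point `code` at the exact grid coordinates (x, y)."""
        k = self.points.index(code)
        return self.visible[k][self.y.index(y)][self.x.index(x)]
```

Keeping every feature point on one shared X/Y grid is what makes it possible to slice the stack along either axis, i.e., by feature point or by location.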

3.3 Data Processing

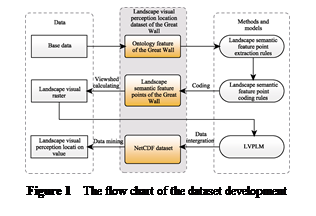

First, landscape ontology resource data were generated by interpreting the basic data, and the vector dataset of landscape semantic feature points of the Gubeikou Great Wall was constructed following the feature point extraction and coding rules. This shapefile dataset includes the wall line of the Gubeikou Great Wall, the point features of the Great Wall itself (beacon towers, etc.), and the landscape semantic feature points. Then, a viewshed analysis was performed for each landscape semantic feature point, the resulting viewshed rasters were integrated and organized using the LVPLM, and the Great Wall landscape visual perception location dataset was constructed based on the NetCDF multidimensional raster data structure. Finally, landscape visual perception information can be extracted from the dataset. The data development process is illustrated in Figure 1.

(1) The generation of ontology data of the Great Wall landscape resources


Based on the distribution vectors from the Great Wall Station platform and the attribute information of the national cultural heritage survey data, and using the ArcGIS Earth 3D terrain environment as a reference, the authors jointly interpreted, corrected, and generated high-precision landscape ontology resource data of the Great Wall at Gubeikou. These data include the wall line and the ontology feature points of the Great Wall (such as enemy towers and beacon towers). Specifically, the wall line was represented by the center line of the wall, the main landscape features such as enemy towers and beacon towers were represented by their geometric center points, and the height of each point was set according to the height of the corresponding landscape feature.

(2) Extraction and coding of semantic feature points of the Great Wall

Landscape semantic feature points are the foundation of the digital representation of visual landscape resources. Following the principle of cartographic generalization, landscape ontology can be abstracted as landscape semantic feature points that preserve landscape semantics as faithfully as possible[9–11]. The extraction rules for semantic feature points were therefore designed following the landscape resource classification and semantic feature point selection rules proposed by Guo[12].

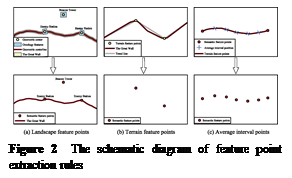

To abstract the morphological characteristics of the Great Wall as fully as possible while completely expressing the landscape semantics, semantic feature points were extracted along the wall at an average interval of 30 m, and additional points were taken where the terrain changed significantly along the direction of the wall. The height of the feature points was set to 6 m, the average height of the Great Wall in the Gubeikou section. Following these principles, the feature points were divided into landscape feature points, terrain feature points, and average interval points, each obtained by a different extraction method: (1) Landscape feature points, i.e., beacon towers, enemy stations, and other Great Wall ontology features, were taken directly from the point data of the ontology features; in principle, all of them were retained because of the importance of their function and visual state. (2) Terrain feature points were extracted where the wall line of the Great Wall changed significantly in plan or in elevation, and densified points were taken along the direction of the wall. In the program-based automatic extraction, the 3D Douglas-Peucker algorithm was used to screen out the terrain feature points on the wall line of the Great Wall[13], with a tolerance of 15 m. (3) Average interval points were added along the wall line at an average interval of 30 m. The extraction method is illustrated in Figure 2. Following these selection rules, the landscape semantic feature points of each section of the Gubeikou Great Wall were extracted by both manual identification and program-based automatic extraction.
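The 3D Douglas-Peucker screening step can be sketched as follows. This is a generic formulation (recursive splitting, implemented with an explicit stack) that measures the 3D distance from each vertex to the segment joining the current endpoints; it is not necessarily identical to the variant in reference [13] or to the authors' program. Vertices are assumed to be (x, y, z) tuples in a projected metric coordinate system with z taken from the DEM, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def _point_segment_distance(p: Point3D, a: Point3D, b: Point3D) -> float:
    """3D Euclidean distance from p to the segment ab."""
    dx, dy, dz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    seg_len2 = dx * dx + dy * dy + dz * dz
    if seg_len2 == 0.0:                      # degenerate segment: a == b
        return math.dist(p, a)
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy + (p[2] - a[2]) * dz) / seg_len2
    t = max(0.0, min(1.0, t))                # clamp the projection onto the segment
    proj = (a[0] + t * dx, a[1] + t * dy, a[2] + t * dz)
    return math.dist(p, proj)


def douglas_peucker_3d(points: Sequence[Point3D], tolerance: float) -> List[int]:
    """Return the indices of wall-line vertices kept as terrain feature points."""
    n = len(points)
    if n <= 2:
        return list(range(n))
    keep = [False] * n
    keep[0] = keep[-1] = True                # endpoints are always kept
    stack = [(0, n - 1)]
    while stack:
        start, end = stack.pop()
        max_d, idx = -1.0, None
        for i in range(start + 1, end):      # farthest vertex from the chord
            d = _point_segment_distance(points[i], points[start], points[end])
            if d > max_d:
                max_d, idx = d, i
        if idx is not None and max_d > tolerance:
            keep[idx] = True                 # significant change: keep and split
            stack.append((start, idx))
            stack.append((idx, end))
    return [i for i, k in enumerate(keep) if k]
```

Because the distance is measured in 3D, a vertex that deviates only in elevation is kept once the deviation exceeds the tolerance, which a planimetric (2D) simplification would miss: `douglas_peucker_3d([(0, 0, 0), (100, 0, 50), (200, 0, 0)], 15.0)` returns `[0, 1, 2]`, whereas a 2 m bump at the same position is dropped.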

After the feature points were extracted, all landscape semantic feature points in the system were encoded using a hierarchical classification coding method. The basic code consists of four parts, namely the section code, location sequence code, structural order code, and element type code, which together form a six-digit code (1 + 3 + 1 + 1 digits; see Table 2).
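Because each code is a fixed-width six-digit string, it can be parsed and regenerated mechanically. The sketch below assumes the digit layout and the section and feature-type code tables shown in Table 2 (section 1 digit, location sequence 3 digits, structural order 1 digit, feature type 1 digit), with Wohushan assigned section code 4 to match the leading digit of its sample codes in Table 2; the class and function names are hypothetical.

```python
from dataclasses import dataclass

# Code tables as listed in Table 2.
SECTIONS = {1: "Simatai", 2: "Jinshanling", 3: "Panlongshan", 4: "Wohushan"}
FEATURE_TYPES = {
    1: "Wall",
    2: "Enemy Station",
    3: "Beacon Tower",
    4: "Shop House",
    5: "Water Pass",
    6: "Guan Fort",
}


@dataclass(frozen=True)
class FeatureCode:
    section: int       # 1 digit
    location: int      # 3 digits, zero-padded in the code
    structure: int     # 1 digit, structural order within one landscape feature
    feature_type: int  # 1 digit


def decode(code: str) -> FeatureCode:
    """Split a six-digit semantic feature point code into its four parts."""
    if len(code) != 6 or not code.isdigit():
        raise ValueError(f"expected a six-digit code, got {code!r}")
    return FeatureCode(int(code[0]), int(code[1:4]), int(code[4]), int(code[5]))


def encode(fc: FeatureCode) -> str:
    """Rebuild the six-digit code, zero-padding the location sequence."""
    return f"{fc.section}{fc.location:03d}{fc.structure}{fc.feature_type}"


def describe(code: str) -> str:
    """Produce the 'coding semantic parsing' text used in Table 2."""
    fc = decode(code)
    return (f"{FEATURE_TYPES[fc.feature_type]} feature point of location "
            f"No.{fc.location} in {SECTIONS[fc.section]} subsystem")
```

For example, `describe("110412")` yields "Enemy Station feature point of location No.104 in Simatai subsystem", matching the corresponding row of Table 2.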


(3) Landscape visual perception calculation

Based on the DEM data, a viewshed analysis was performed in sequence for every landscape semantic feature point constituting the landscape system of the Gubeikou Great Wall. Two analysis ranges were used, the whole research area and a 10 km range, producing viewshed rasters of each landscape semantic feature point for each range. Each viewshed raster is a set of pixels covering the entire calculation area, and the value of each pixel is 1 or 0, indicating that the given landscape semantic feature point is visible or invisible from that location. The spatial distribution of visual perception locations can then be obtained from these results.
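To illustrate what a single 0/1 viewshed raster encodes, the following is a deliberately naive line-of-sight sketch on a small DEM grid. It is not ArcGIS's viewshed algorithm: it samples the nearest DEM cell along each sight line, ignores Earth curvature and atmospheric refraction, and would be far too slow for a full 12.5 m DEM. The 6 m offset for the feature point follows the feature point height stated above, while `cell_offset` (the height of a viewer standing in each cell, default 0), `max_radius`, and the function name are assumptions.

```python
import math
from typing import List, Optional

Grid = List[List[float]]


def viewshed(dem: Grid, cell_size: float, row: int, col: int,
             point_offset: float = 6.0, cell_offset: float = 0.0,
             max_radius: Optional[float] = None) -> List[List[int]]:
    """Return a 0/1 raster: 1 where the feature point at (row, col) is visible."""
    nrows, ncols = len(dem), len(dem[0])
    z0 = dem[row][col] + point_offset          # elevation of the feature point
    out = [[0] * ncols for _ in range(nrows)]
    for r in range(nrows):
        for c in range(ncols):
            dist = math.hypot(r - row, c - col) * cell_size
            if max_radius is not None and dist > max_radius:
                continue                       # outside the analysis range: stays 0
            if r == row and c == col:
                out[r][c] = 1
                continue
            target_z = dem[r][c] + cell_offset
            steps = max(abs(r - row), abs(c - col))
            visible = True
            # Walk the sight line and test each intermediate cell against it.
            for s in range(1, steps):
                t = s / steps
                rr = round(row + t * (r - row))
                cc = round(col + t * (c - col))
                sight_z = z0 + t * (target_z - z0)
                if dem[rr][cc] > sight_z:
                    visible = False
                    break
            out[r][c] = 1 if visible else 0
    return out
```

On a one-row profile with a 100 m ridge, `viewshed([[0, 0, 100, 0, 0]], 12.5, 0, 0)` marks the ridge itself as visible but the two cells behind it as hidden. For a 10 km analysis range one would pass `max_radius=10_000` with `cell_size=12.5`.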

(4) Landscape visual perception location data integration based on LVPLM

Following the organizational structure of the LVPLM, the "Raster to NetCDF" tool in the ArcGIS multidimensional toolbox was used to integrate all the calculated viewshed raster layers into .nc multidimensional data, yielding the landscape visual perception location dataset of the Gubeikou Great Wall.

4 Data Results and Validation

4.1 Data Composition

The dataset consists of three parts: (1) the subset of semantic feature points selected manually; (2) the subset of semantic feature points selected automatically by a program; and (3) verification points. These are archived in three folders named "Gubeikou_LVPLM_Manually", "Gubeikou_LVPLM_Automatically_10km", and "Gubeikou_LVPLM_Validation_Data". Data subsets (1) and (2) include vector data of the ontology features and semantic feature points of the Gubeikou Great Wall, archived in .shp format, and visual perception location data of the Gubeikou Great Wall landscape, stored in .nc format.

4.2 Data Products

(1) Semantic feature points of the Gubeikou Great Wall landscape

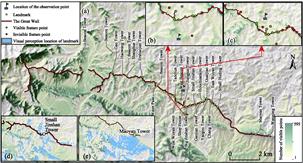

A total of 941 semantic feature points were extracted from the Gubeikou Great Wall by manual selection, and 944 by program-based selection. The overall spatial distribution and the extraction results of the manually selected feature points are shown in Figure 3 (for ease of presentation, selected feature points are extracted and displayed separately in the figure).

Figure 3 The extraction results of semantic feature points from the Gubeikou Great Wall landscape

Each feature point was assigned a unique code carrying its landscape semantic information (Table 2). At the spatial scale of the current dataset, each landscape feature is represented by one feature point, so the structural order code (the fifth digit of the code) is 1 for all points. If additional feature points need to be added to a landscape feature in the future, they can be numbered sequentially using the structural order code.

Table 2 The coding of the landscape semantic feature points of the Gubeikou Great Wall (part)

| Sample code | Section (code) | Location sequence | Structural sequence | Feature type (code) | Coding semantic parsing |
|---|---|---|---|---|---|
| 100111 | Simatai (1) | 001 | 1 | Wall (1) | Wall feature point of location No.1 in Simatai subsystem |
| 101511 | Simatai (1) | 015 | 1 | Wall (1) | Wall feature point of location No.15 in Simatai subsystem |
| 110412 | Simatai (1) | 104 | 1 | Enemy Station (2) | Enemy Station feature point of location No.104 in Simatai subsystem |
| 211014 | Jinshanling (2) | 110 | 1 | Shop House (4) | Shop House feature point of location No.110 in Jinshanling subsystem |
| 220613 | Jinshanling (2) | 206 | 1 | Beacon Tower (3) | Beacon Tower feature point of location No.206 in Jinshanling subsystem |
| 220716 | Jinshanling (2) | 207 | 1 | Guan Fort (6) | Guan Fort feature point of location No.207 in Jinshanling subsystem |
| 301215 | Panlongshan (3) | 012 | 1 | Water Pass (5) | Water Pass feature point of location No.12 in Panlongshan subsystem |
| 317911 | Panlongshan (3) | 179 | 1 | Wall (1) | Wall feature point of location No.179 in Panlongshan subsystem |
| 400513 | Wohushan (4) | 005 | 1 | Beacon Tower (3) | Beacon Tower feature point of location No.5 in Wohushan subsystem |
| 403312 | Wohushan (4) | 033 | 1 | Enemy Station (2) | Enemy Station feature point of location No.33 in Wohushan subsystem |

(2) Overall statistical characteristics of the visual perception locations of the Gubeikou Great Wall landscape

The dataset integrates the overall visual perception location information of the landscape system. Through data aggregation, a visual perception metric can be obtained for each spatial location unit, namely the number of the landscape system's feature points visible from that location. On this basis, the overall statistical characteristics of landscape visual perception can be analyzed: the more semantic feature points are visible from a location, the higher its potential value as a perception location, and vice versa. The spatial distribution of the number of visible feature points across visual perception locations is illustrated in Figure 4.
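The aggregation described above amounts to summing the 0/1 viewshed rasters over the Points dimension, cell by cell. The sketch below shows this with plain nested lists; the function names, and the ranking helper (which simply orders cells by count as one possible way to shortlist candidate perception locations), are illustrative assumptions rather than part of the published workflow.

```python
from typing import List, Tuple

Grid = List[List[int]]  # 0/1 viewshed raster for one feature point


def count_visible_points(viewsheds: List[Grid]) -> Grid:
    """Sum the viewshed rasters over the Points dimension, cell by cell."""
    if not viewsheds:
        raise ValueError("need at least one viewshed raster")
    nrows, ncols = len(viewsheds[0]), len(viewsheds[0][0])
    counts = [[0] * ncols for _ in range(nrows)]
    for grid in viewsheds:
        if len(grid) != nrows or any(len(row) != ncols for row in grid):
            raise ValueError("all viewshed rasters must share the same grid")
        for r in range(nrows):
            for c in range(ncols):
                counts[r][c] += grid[r][c]
    return counts


def rank_cells(counts: Grid, top_n: int) -> List[Tuple[int, int, int]]:
    """Return (row, col, count) for the top_n cells with the most visible points."""
    cells = [(counts[r][c], r, c)
             for r in range(len(counts)) for c in range(len(counts[0]))]
    cells.sort(key=lambda t: (-t[0], t[1], t[2]))   # highest count first
    return [(r, c, v) for v, r, c in cells[:top_n]]
```

With three 2 × 2 viewsheds `[[1, 0], [1, 1]]`, `[[0, 0], [1, 0]]`, and `[[1, 1], [1, 0]]`, the count raster is `[[2, 1], [3, 1]]`, and the lower-left cell ranks first.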

(3) Visual locations of the Gubeikou Great Wall landscape

Based on the landscape visual perception location dataset of the Gubeikou Great Wall, the following information can be extracted: (1) the visual perception locations of specific semantic feature points, i.e., the distribution of locations from which a target feature point is visible; and (2) the visible semantic feature points at a specific location, i.e., the semantic feature points that can be seen from a given observation point.
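Both queries reduce to slicing the (Points, Y, X) stack along different axes. The sketch below assumes the viewshed rasters have been loaded into a dictionary keyed by semantic feature point code and that the raster is north-up with a known left edge `x_min` and top edge `y_max`; the helper names, the dictionary layout, and these origin parameters are hypothetical.

```python
from typing import Dict, List, Tuple

Grid = List[List[int]]  # 0/1 viewshed raster, row 0 at the top (north-up)


def cell_index(x: float, y: float, x_min: float, y_max: float,
               cell_size: float) -> Tuple[int, int]:
    """Map projected coordinates to (row, col) in a north-up raster."""
    col = int((x - x_min) // cell_size)
    row = int((y_max - y) // cell_size)
    return row, col


def locations_seeing(viewsheds: Dict[str, Grid], code: str) -> List[Tuple[int, int]]:
    """Query (1): every (row, col) cell from which feature point `code` is visible."""
    return [(r, c)
            for r, line in enumerate(viewsheds[code])
            for c, v in enumerate(line) if v == 1]


def points_visible_from(viewsheds: Dict[str, Grid], row: int, col: int) -> List[str]:
    """Query (2): codes of all feature points visible from cell (row, col)."""
    return sorted(code for code, grid in viewsheds.items() if grid[row][col] == 1)
```

A typical use is to convert an observation point's projected coordinates with `cell_index` and pass the resulting cell to `points_visible_from`, which returns the codes of the semantic feature points that should be visible there.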

Two representative landscape features, Maoyan Tower and Small Jinshan Tower, were selected to identify the spatial distribution characteristics of their visual locations. As shown in Figure 4(e), Maoyan Tower in the Simatai section has a large and relatively concentrated visual location range, mainly distributed to the southwest. In contrast, the visual locations of Small Jinshan Tower in the Jinshanling section are scattered on both the inner and outer sides of the Great Wall (Figure 4(d)). Observation points were then selected on the south and north sides of the Jinshanling section of the Great Wall, and the feature points visible from them were obtained from the visual perception location information in the dataset, as shown in Figure 4(b) and Figure 4(c).

Figure 4 The spatial distribution of the overall feature points in the Gubeikou Great Wall

4.3 Data Validation

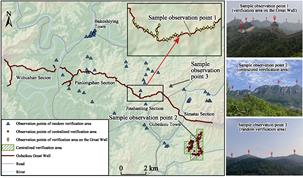

From August 9 to 13, 2023, the project team conducted fieldwork in the Gubeikou Great Wall area to verify the accuracy of the calculation results. Considering the characteristics of the Great Wall landscape, the spatial distribution of visual perception locations, and the objectives of accuracy verification, three types of verification areas were set up: the verification area on the Great Wall, the centralized verification area along the Great Wall, and the random verification area. The consistency between visual perception in real scenes and the calculated visual perception results was evaluated, and the accuracy of the landscape visual perception location dataset of the Great Wall was assessed on that basis.

(1) Selection of verification areas and collection of observation point data

The verification area on the Great Wall consisted of observation points located on the wall itself and was set up in the Jinshanling section. The centralized verification area along the Great Wall was located south of the Simatai section and comprised 11 regular 500 m × 500 m squares. Observation points in the random verification area were selected randomly along transport routes and in villages on both sides of the Gubeikou Great Wall (Figure 5).

Figure 5 The distribution of verification areas and observation points in the Gubeikou Great Wall

Table 3 The field observation information at the Gubeikou Great Wall (part)

| Time | Location | Reachable | Code | Latitude (N) | Longitude (E) | Factors affecting visibility | Semantic feature point codes of visible features | Number of visible features |
|---|---|---|---|---|---|---|---|---|
| 2023/8/9 12:40 | On the wall | Y | J16 | 40°40ʹ44.59ʺ | 117°15ʹ4.10ʺ | None | 206112; 205512; 205012; 204712 | 4 |
| 2023/8/9 11:41 | On the wall | Y | J23 | 40°40ʹ39.20ʺ | 117°14ʹ44.75ʺ | Terrain | 206712; 207212; 207912; 211014; 211112; 211512; 212112; 212313; 213212; 213612; 214012; 214312; 214812; 215212; 215712 | 15 |
| 2023/8/9 12:14 | On the wall | Y | J19 | 40°40ʹ40.36ʺ | 117°14ʹ59.27ʺ | Vegetation | 201212; 202112; 202312; 202912; 203612; 204712; 205012; 205512 | 8 |
| 2023/8/11 15:49 | Beyond the wall | Y | A742-B19 | 40°40ʹ24.18ʺ | 117°16ʹ51.20ʺ | Vegetation; Weather | 114212; 115112; 115712; 113612; 112812 | 5 |
| 2023/8/12 13:54 | Beyond the wall | Y | A1059-1 | 40°42ʹ3.59ʺ | 117°14ʹ25.72ʺ | Vegetation; Buildings; Terrain | None | 0 |
| 2023/8/12 10:54 | Beyond the wall | Y | A420-7 | 40°38ʹ37.25ʺ | 117°17ʹ23.94ʺ | Vegetation; Buildings | 100512; 102212; 103812 | 3 |
| 2023/8/11 11:19 | Beyond the wall | Y | A735-1 | 40°40ʹ15.72ʺ | 117°14ʹ24.61ʺ | Weather | 210312 | 1 |
| 2023/8/10 17:11 | Beyond the wall | N | A1237-B10 | N/A | N/A | N/A | N/A | N/A |

|

Project team members first went to the verification areas to collect observation point data. If a target point could not be reached because of objective factors such as terrain, land cover, or personnel safety, it was recorded as inaccessible. If it was reachable, the current coordinates were determined with a handheld GPS receiver on arrival, and the visibility of individual Great Wall landscape features from that point was recorded by taking photos (which contained azimuth and other information). The 3D terrain view and the vector data of the individual landscape features in the verification area were then loaded in OvitalMap software, and the semantic feature point codes of all landscape features visible in each photo were identified by comparison. Finally, this information, together with the observation point code, the factors affecting visibility, and other details, was entered into a preset field observation form for submission and compilation (Table 3). After data collation, a total of 137 valid observation points were obtained: 27 in the verification area on the Great Wall, 60 in the centralized verification area, and 50 in the random verification area.
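Table 3 records coordinates in degrees, minutes, and seconds. Matching an observation point against the dataset would require converting these to decimal degrees and then projecting them into the raster's coordinate system; the projection is not specified here and is not handled below. A minimal conversion sketch, assuming northern and eastern hemispheres (so results are positive), with a hypothetical function name:

```python
import re

# Degrees, minutes, seconds separated by any non-digit symbols (°, ʹ, ʺ, ', ").
_DMS = re.compile(r"(\d+)\D+(\d+)\D+(\d+(?:\.\d+)?)")


def dms_to_decimal(text: str) -> float:
    """Convert a D-M-S string such as '40°40ʹ44.59ʺ' to decimal degrees."""
    m = _DMS.search(text)
    if m is None:
        raise ValueError(f"not a degrees-minutes-seconds string: {text!r}")
    degrees, minutes, seconds = (float(g) for g in m.groups())
    if minutes >= 60 or seconds >= 60:
        raise ValueError(f"minutes or seconds out of range: {text!r}")
    return degrees + minutes / 60 + seconds / 3600
```

For observation point J16, `dms_to_decimal("40°40ʹ44.59ʺ")` gives about 40.679053.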

(2) Accuracy verification methods and results

For each observation point, the quantity fit and content fit were obtained by comparing its field observation data with the visible individual landscape features (such as beacon towers and enemy stations) recorded in the dataset at the corresponding location, in terms of both the number of visible feature points and the correspondence between the specific visible feature points[8]. The dataset validation results were then obtained by averaging the quantity fit and content fit for each type of verification area (Table 4). The overall average quantity fit and content fit were 76.37% and 70.69%, respectively. DEM accuracy, the preservation status of the landscape features, and occlusion by buildings and vegetation were all found to affect the accuracy of the dataset. The verification results suggest that the dataset is consistent with the field observations and that its landscape visual perception location information is highly reliable.

Table 4 The verification results of the Gubeikou Great Wall LVPLM dataset

| Type of verification area | Number of observation points | Average quantity fit | Average content fit |
|---|---|---|---|
| Verification area on the Great Wall | 27 | 94.55% | 76.32% |
| Centralized verification area | 60 | 64.28% | 62.29% |
| Random verification area | 50 | 81.07% | 77.73% |
| Overall | 137 | 76.37% | 70.69% |
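The Overall row of Table 4 agrees with an average weighted by the number of observation points in each verification area type (equivalently, the mean over all 137 points), rather than a simple mean of the three area averages. The text does not state this weighting explicitly; the short check below merely reproduces the reported figures under that reading.

```python
# (verification area type, observation points, avg. quantity fit %, avg. content fit %)
TABLE_4 = [
    ("Verification area on the Great Wall", 27, 94.55, 76.32),
    ("Centralized verification area", 60, 64.28, 62.29),
    ("Random verification area", 50, 81.07, 77.73),
]


def weighted_mean(rows, column):
    """Average of `column`, weighting each row by its number of observation points."""
    total = sum(row[1] for row in rows)
    return sum(row[1] * row[column] for row in rows) / total


n_total = sum(row[1] for row in TABLE_4)
quantity_fit = weighted_mean(TABLE_4, 2)
content_fit = weighted_mean(TABLE_4, 3)
print(n_total, round(quantity_fit, 2), round(content_fit, 2))  # 137 76.37 70.69
```

An unweighted mean of the three quantity-fit values would instead give about 79.97%, so the weighting matters when comparing area types of different sizes.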

5 Discussion and Conclusion

In this dataset, the landscape resources of the Gubeikou Great Wall were digitally expressed as vector geographic feature points, and the landscape semantic information of each feature point was recorded by coding, yielding landscape ontology data of the Gubeikou Great Wall. Using the viewshed rasters calculated for the landscape semantic feature points, the landscape visual perception location information was organized and stored in an integrated manner based on the LVPLM, and the correlation between visual perception locations and landscape semantic feature points was established in the NetCDF multidimensional data format. This enables bidirectional queries, from feature points to the locations from which they are visible and from specific locations to the feature points visible there. Field verification indicates that the dataset's results are reliable. The dataset therefore provides researchers engaged in landscape planning and Great Wall research not only with semantic feature point data that characterize the landscape system of the Gubeikou Great Wall, but also with multidimensional landscape visual perception location raster data carrying encoded semantic sequence information. With this dataset, further landscape visual perception information can be mined to evaluate visual perception effects, select potential high-quality landscape perception locations, analyze the combinations of Great Wall landscape features visible from different locations, and support other applications, thereby assisting high-quality tourism spatial planning and the construction of the Great Wall National Cultural Park.

Author Contributions

Li, Z. H. implemented the algorithm for automatic feature point extraction and data integration, and wrote the data paper. Li, R. J. formulated the overall development plan for the dataset, designed its organizational structure, and revised and approved the data paper. Sun, B. L. participated in the production and validation of the dataset. Li, J. H. contributed to the design and implementation of the data validation.

Conflicts of Interest

The authors declare no conflicts of interest.

References

[1] Ognjanović, Z., Marinković, B., Šegan-Radonjić, M., et al. Cultural heritage digitization in Serbia: standards, policies, and case studies [J]. Sustainability, 2019, 11(14): 3788.

[2] Wu, B. H., Wang, M. T. Heritage revitalization, original site value, and presentation methods [J]. Tourism Tribune, 2018, 33(9): 3–5.

[3] Li, F., Zou, T. Q. National cultural park: logic, origins and implications [J]. Tourism Tribune, 2021, 36(1): 14–26.

[4] Cheng, R. F., Xu, C. C. A study on the spatial structure layout and development strategy of the cultural tourism belt of the Great Wall [J]. Economy and Management, 2022, 36(1): 58–64.

[5] Li, S., Zhu, C. Research on landscape evaluation of engineering universities based on visual perception [J]. Areal Research and Development, 2022, 41(3): 49–54, 74.

[6] Li, Z. H., Li, R. J., Sun, B. L., et al. Landscape visual perception location dataset of Great Wall in Gubeikou [J/DB/OL]. Digital Journal of Global Change Data Repository, 2024. https://doi.org/10.3974/geodb.2024.04.03.V1. https://cstr.escience.org.cn/CSTR:20146.11.2024.04.03.V1.

[7] GCdataPR Editorial Office. GCdataPR data sharing policy [OL]. https://doi.org/10.3974/dp.policy.2014.05 (Updated in 2017).

[8] Sun, B. L., Guo, F. H., Li, R. J., et al. Linear cultural heritage landscape visual perception location model and demonstration [J]. Progress in Geography, 2024, 43(1): 80–92.

[9] Ye, Y. J., Li, R. J., Fu, X. Q., et al. A DEM based semantic perception of culture landscape in the tourist destination of the western Qing tombs [J]. Journal of Geo-information Science, 2012, 14(5): 576–583.

[10] Li, R. J., Gu, F., Guo, F. H., et al. Cultural landscape perception degree model and perception function division based on DEM of traffic line: a case study of Zijingguan Great Wall [J]. Scientia Geographica Sinica, 2015, 35(9): 1086–1094.

[11] Guo, F. H., Cheng, L. P., Fu, X. Q., et al. Calculation model of tourist's landscape perception degree within destination based on grid data structure [J]. Areal Research and Development, 2018, 37(1): 125–130.

[12] Guo, F. H., Sun, B. L., Li, J. H., et al. The Great Wall visual landscape resources and its perception location calculation method [J]. Geography and Geo-Information Science, 2022, 38(6): 9–16.

[13] Fei, L. F., He, J., Ma, C. Y., et al. Three dimensional Douglas-Peucker algorithm and the study of its application to automated generalization of DEM [J]. Acta Geodaetica et Cartographica Sinica, 2006(3): 278–284.